Florida teen’s suicide linked to AI chatbot, family lawsuit claims

By Karah Rucker (Reporter), Alex Delia (Producer), Jack Henry (Video Editor)

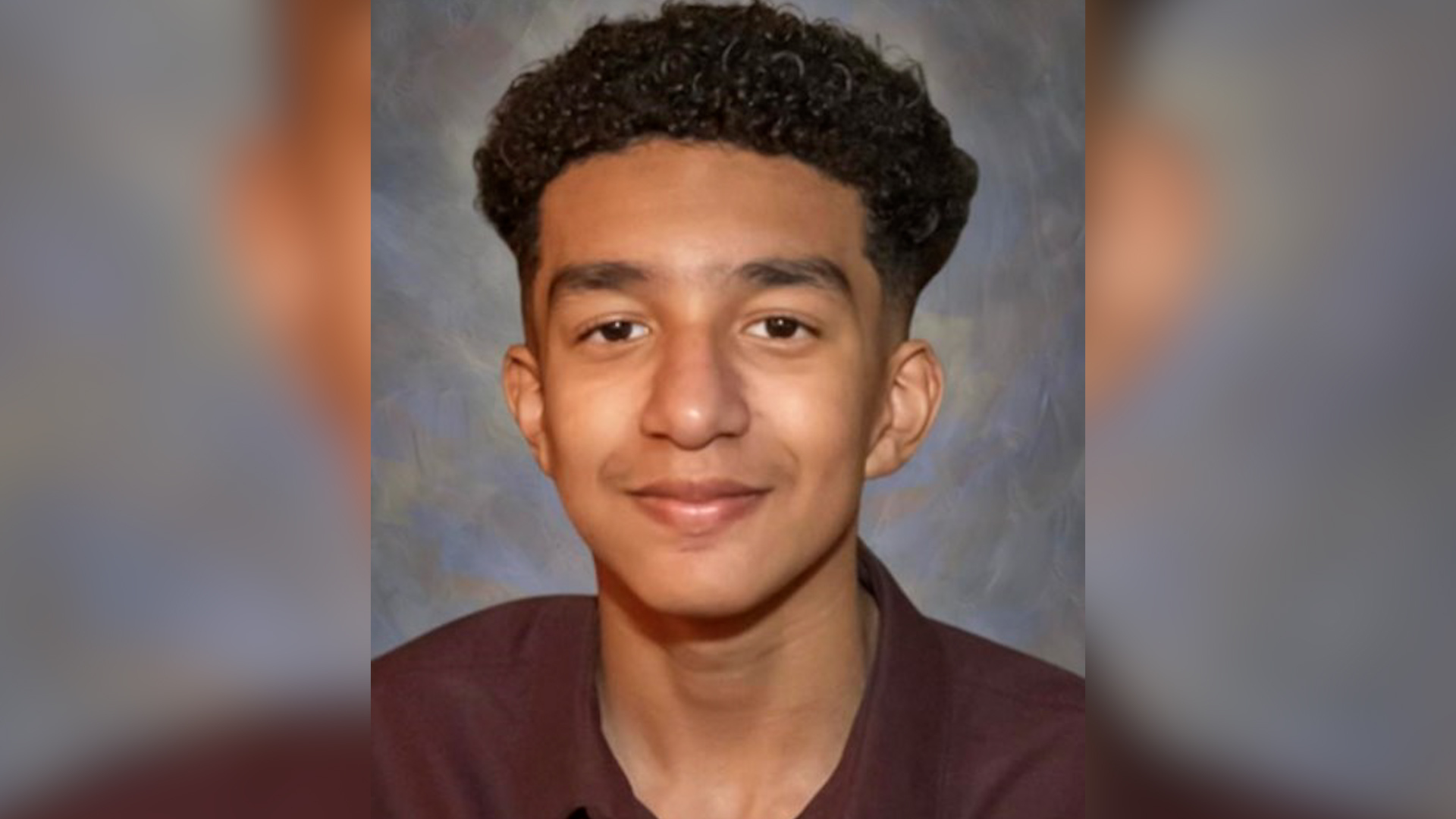

A Florida mother has filed a lawsuit against Character.AI, alleging the company’s chatbot manipulated her 14-year-old son into taking his own life. The lawsuit claims the boy developed an emotional attachment to the chatbot, leading to his death in February.


Megan Garcia, the mother of Sewell Setzer III, is suing the chatbot company for negligence, wrongful death, and deceptive trade practices after her son’s suicide.

Setzer had been using an AI chatbot modeled after the “Game of Thrones” character Daenerys Targaryen, interacting with it extensively for months.


According to the lawsuit, Setzer became obsessed with the bot, and his emotional dependence worsened, ultimately contributing to his tragic decision to take his life.

Garcia said that her son, who had been diagnosed with anxiety and a mood disorder, changed after engaging with the AI. He became withdrawn, stopped participating in activities he once loved, and increasingly relied on his interactions with the chatbot, which he believed he had fallen in love with.

On the day of his death, he communicated with the chatbot one last time, expressing his love, before taking his life.

We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family. As a company, we take the safety of our users very seriously and we are continuing to add new safety features that you can read about here:…

— Character.AI (@character_ai) October 23, 2024

Character.AI responded to the lawsuit, stating it was heartbroken over the incident but denied the allegations.

The company emphasized its commitment to user safety, though the lawsuit claims it knowingly marketed a hypersexualized product to minors.

The lawsuit remains ongoing, and no trial date has been set.

A GRIEVING MOTHER IS TAKING LEGAL ACTION AFTER HER 14-YEAR-OLD SON DIED BY SUICIDE EARLIER THIS YEAR. SHE BELIEVES HIS DEATH WAS INFLUENCED BY HIS INTERACTION WITH AN AI CHATBOT.

SEWELL SETZER THE THIRD BECAME EMOTIONALLY ATTACHED TO THE BOT, WHICH HE NAMED “DAENERYS TARGARYEN,” AFTER THE GAME OF THRONES CHARACTER.

ACCORDING TO THE LAWSUIT, HE BEGAN USING THE CHATBOT APP, CREATED BY THE COMPANY CHARACTER AI, IN APRIL 2023. HIS MOTHER, MEGAN GARCIA, SAYS HIS BEHAVIOR CHANGED QUICKLY. HE WITHDREW FROM FRIENDS, QUIT THE JUNIOR VARSITY BASKETBALL TEAM, AND EVENTUALLY STARTED THERAPY FOR ANXIETY AND A MOOD DISORDER.

THE LAWSUIT CLAIMS HE BECAME OBSESSED WITH THE AI, SPENDING HOURS EACH DAY TALKING TO IT AND EVEN DEVELOPING A ROMANTIC ATTACHMENT. IN THE FINAL MONTHS OF HIS LIFE, HIS JOURNAL DETAILED HIS CONFUSION BETWEEN THE VIRTUAL WORLD OF THE CHATBOT AND REALITY. ON FEBRUARY 28TH… HE SENT ONE LAST MESSAGE TO THE BOT, TELLING IT HE LOVED IT, BEFORE TRAGICALLY TAKING HIS OWN LIFE.

Megan Garcia, mother of Sewell Setzer III

“I had the opportunity to see hundreds and hundreds of messages that were very sexual in nature, graphic… One of the conversations dealt specifically with him talking about suicide to this bot.”

“He was saying, ‘I promise to come home to you soon,’ and she says, ‘Yes, please, just come home to me.’… And he said, ‘What if I told you that I could come home right now?’ and she said, ‘Please do, my sweet king.’”

IN AN INTERVIEW, GARCIA SAID SHE HAD NO IDEA HER SON WAS FORMING SUCH AN INTENSE EMOTIONAL BOND WITH AN A-I. SHE NOTICED HE HAD LOST INTEREST IN ACTIVITIES HE USED TO LOVE, BUT WAS UNAWARE OF THE DEPTH OF HIS STRUGGLES. SHE IS NOW SUING CHARACTER A-I FOR NEGLIGENCE, WRONGFUL DEATH, AND DECEPTIVE TRADE PRACTICES. THE LAWSUIT ACCUSES THE COMPANY OF KNOWINGLY MARKETING A HYPERSEXUALIZED CHATBOT TO CHILDREN, PUTTING THEM AT RISK.

CHARACTER AI RESPONDED, SAYING IT IS HEARTBROKEN OVER THE TRAGEDY AND EXPRESSING ITS CONDOLENCES TO THE FAMILY. THE COMPANY ALSO DENIED THE ALLEGATIONS AND SAID IT IS COMMITTED TO USER SAFETY.

THIS CASE IS RAISING CONCERNS ABOUT THE GROWING ROLE OF A-I IN CHILDREN’S LIVES AND THE POTENTIAL RISKS WHEN THESE TECHNOLOGIES ARE USED WITHOUT ENOUGH OVERSIGHT.


FOR STRAIGHT ARROW NEWS… I’M KARAH RUCKER.
